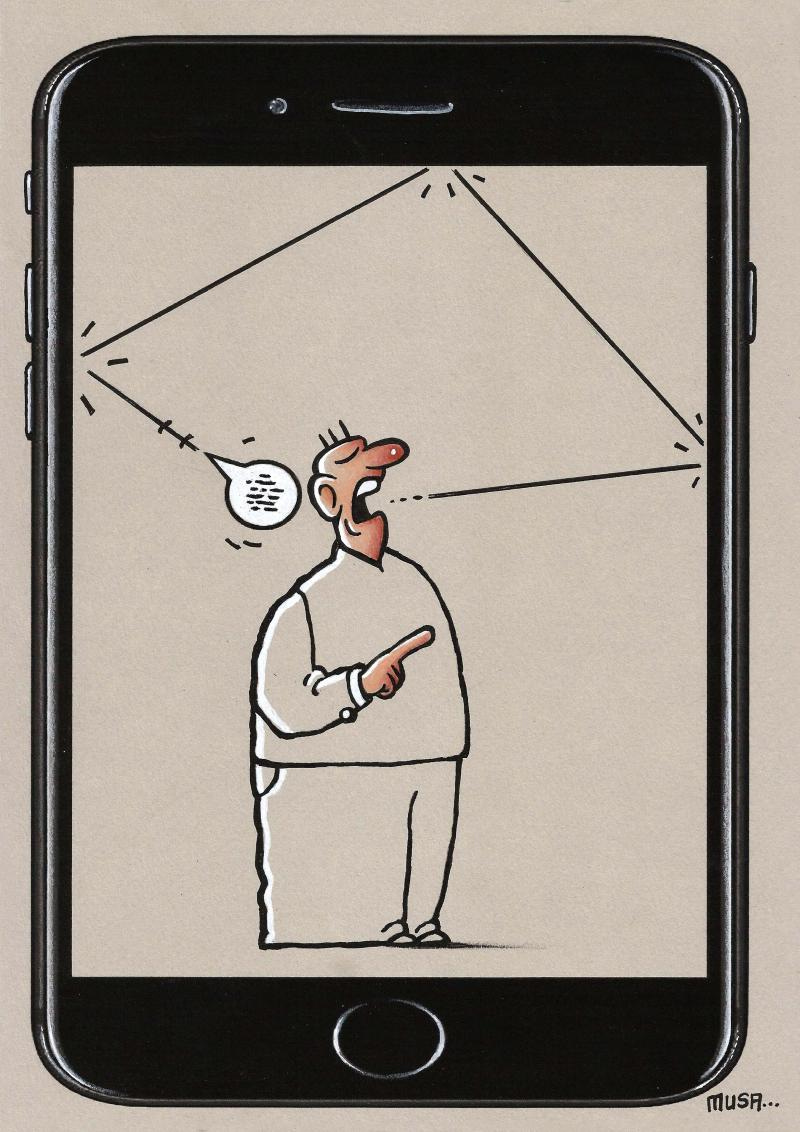

People hate echo chambers. Well, they love to accuse their political and ideological enemies of inhabiting echo chambers while insisting that they themselves obviously dwell in the pure realm of uncontroversial data and valid inference. Basically the same thing. I’m here to tell you that not all echo chambers are bad ones. In fact, from the perspective of gaining and keeping true beliefs it can often be a most excellent thing to inhabit an echo chamber. The hard part is getting into the right one.

Echo chambers are supposed to be a sort of closed epistemic system, in which all the residents of the chamber ping-pong the same beliefs back and forth among themselves, becoming more and more certain that the in-group beliefs are true ones. You know, like “DEI is important and valuable to achieving equality” and “DEI must be destroyed with extreme prejudice.” Stick the first one in a leftist echo chamber and a week later it will be a rainbow bumper sticker on everyone’s Prius. Put the latter into a MAGA echo chamber and the next day Alex Jones will be insisting it’s more true than the moon landing.

I’m going to give a model (admittedly idealized, as every model is) of how echo chambers work. Then I’m going to explain why some of them are good and some of them are bad. A nice bonus of this model is that it predicts both that echo chambers are likely to become more prominent and also that belief polarization will become more extreme. Someone who insists, “boo to echo chambers! Just listen to the outgroup more!” can’t get these benefits.

The EC model

Echo chambers are:

- Amplifying. Individual beliefs are aggregated and magnified by the group. This is the chamber.

- Reflecting. Group consensus is reflected back to individual members, increasing their confidence. This is the echo.

- Recursive. Once individuals raise their confidence to better conform with the group, those fresh credences are again amplified. This is how beliefs are repeated, reinforced, and steadily strengthened.

I’m not giving a whole theory of groups here, but it’s pretty intuitive that there are belief clusters that we label, and that people self-identify under those labels: Lutherans, Democrats, creationists, biologists, Bayesians, Zizians, Marcionites, etc. Here’s a modest requirement to be a member of this kind of group: you’ve got to think that there’s a >50% chance that the group is correct about >50% of the beliefs it holds within its domain.

For example, Kris is a member of the epistemic group of biologists only if she thinks it is better than a coin toss that the group is right about most biological beliefs it holds. Even a professional biologist could have a couple of outlier, heretical beliefs, or think the group got it wrong about one thing or another. But if most of her biological beliefs are heretical ones, Kris is not a member of that group.

Amplification

All right, how do we get amplification? Echo chambers are amplifying in that they take individual beliefs and magnify their strength at the group level. For instance, as individuals, most libertarians may be positively disposed to small government, but as a group they are strongly supportive of it. So why are group beliefs more strongly held than any individual ones?

To build an echo chamber we’ll need an explanation for why the opinions of like-minded individuals add up so that the collective group judgment is even stronger than the average member. Fortunately there’s a bit of math that does exactly the work we need: Condorcet’s Jury Theorem, the idea behind the wisdom of crowds.

Condorcet’s jury theorem: Assume (Independence) that individual voters have independent probabilities of voting for the correct alternative. Also assume (Competence) that these probabilities exceed ½ for each voter. It follows that as the size of the group of voters increases, the probability of a correct majority increases and tends to one (infallibility) in the limit.
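For readers who like to see the formula, here is one standard way to write the result. It is a sketch under two simplifying assumptions that go beyond the statement above: the number of voters n is odd (so there are no ties), and every voter is correct with the same probability p. The symbols n, p, and P_n are labels introduced only for this illustration.

```latex
P_n \;=\; \sum_{k=(n+1)/2}^{n} \binom{n}{k}\, p^{k} (1-p)^{n-k},
\qquad
p > \tfrac{1}{2} \;\Longrightarrow\; P_n \to 1 \ \text{as}\ n \to \infty .
```

Here P_n is the probability that a majority of the n voters picks the correct alternative.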

Condorcet originally used this result to show that a jury was much likelier to get the correct verdict than the average juror. I know it’s also a bit technical, weird, and rather counterintuitive. Here’s an easy example to understand how his theorem works.

Suppose you have an unfair coin. In fact, when you flip it, it comes up heads 60% of the time. Suppose you and your sister decide to flip the coin for the last piece of cake. Being smart, you call heads. There is still a 40% chance your sister gets the cake. Now suppose that instead of a single-flip game, you decide to award the cake on two out of three flips. Heads will win if flip 1 = heads and flip 2 = heads, or flip 1 = heads and flip 3 = heads, or flip 2 = heads and flip 3 = heads. The probability that heads will win in this game is 64.8%. Proof: pr(HH) + pr(HTH) + pr(THH) = (.6*.6) + (.6*.4*.6) + (.4*.6*.6) = .648. Conversely, the probability that tails will win is pr(TT) + pr(THT) + pr(HTT) = (.4*.4) + (.4*.6*.4) + (.6*.4*.4) = .352. Of course, .648 + .352 = 1.

There is a better chance of winning with heads in the two out of three game than with a single toss. What about playing three out of five? In this case there are 10 ways for heads to win (counting games that stop as soon as one side has three wins), with a total probability of 68.256%. In a single toss, the chance of heads winning was .6; in two out of three it was .648; in three out of five it was .68256. The larger the number of tosses, the likelier it is that heads wins. In the infinite limit, the probability that heads wins is 1. Analogously, the more jurors on Condorcet’s jury who tend to vote in one direction, the more likely it is that the jury as a whole will vote that way.
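The cake numbers are easy to check by machine. The sketch below is only an illustration: the function name `majority_win_probability` is made up for this example, and it assumes every flip is independent with the same 60% chance of heads. It counts all the flips rather than stopping early, which gives the same win probability.

```python
from math import comb


def majority_win_probability(p_heads: float, n_flips: int) -> float:
    """Probability that heads comes up in more than half of n_flips (odd n only)."""
    if n_flips % 2 == 0:
        raise ValueError("use an odd number of flips so there are no ties")
    needed = n_flips // 2 + 1  # smallest number of heads that is a majority
    return sum(
        comb(n_flips, k) * p_heads**k * (1 - p_heads) ** (n_flips - k)
        for k in range(needed, n_flips + 1)
    )


# Single toss, two out of three, three out of five, then much longer games.
results = {n: majority_win_probability(0.6, n) for n in (1, 3, 5, 101, 1001)}
for n, prob in results.items():
    print(f"best of {n:>4}: heads wins with probability {prob:.5f}")
```

The first three lines reproduce .6, .648, and .68256 from the text, and the longer games show the probability creeping toward 1.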

The real power of Condorcet is that it drives to unanimity in group judgment. If most members of a group lean towards some claim, the group as a whole will endorse that claim more strongly than the average member. The larger the group (as with the larger number of coin tosses), the more probable the group will judge that claim to be. For example, if most individual Democrats think that gun control is a good idea, your neighborhood Democrats will collectively think it is a very good idea, and Democrats as a whole will be certain that gun control is a good idea.

The first property of echo chambers is that they are amplifying: individual credences are aggregated such that group-level credence is higher. Condorcet’s jury theorem shows how to get amplification: it produces group confidence that exceeds average individual confidence. Now we have groupthink, but not yet echo chambers.

Reflection

The second property of echo chambers is that they are reflecting. For that we need a means of mirroring group consensus back to believers, increasing their confidence. I don’t think there’s a great mystery about how we learn about peer group consensus. A trusted fellow Catholic tells you, “here’s the latest about the ordo amoris from Pope Francis.” Or maybe you read Catholic blogs or follow the Pope on Facebook. Or perhaps all your co-religionists are talking about the topic after mass. But why should this affect what you believe?

From the psychological perspective it’s long been known that people routinely alter their judgments to conform with the group. In Solomon Asch’s classic experiments in the 1950s, about a third of experimental subjects judged lines of obviously different lengths to be the same length when a majority group did so. As far as I can tell from the psych lit, Asch’s basic findings about peer pressure have held up pretty well since then.

There’s a more recent study by Martin Tanis and Tom Postmes indicating that people will trust other identifiable members of their in-group without having to know any other particular characteristics, a courtesy not extended to those outside the group. So a self-identified Republican will tend to trust someone simply because they know that person is a fellow Republican (and will expect reciprocal trust in return), but a Democrat will have to personally demonstrate their bona fides before earning trust from a Republican.

We tend to trust the in-group and we’re subject to believing things because of peer pressure. This is not shocking information, for sure, but it gives support to the idea that in fact people do become more confident in what they believe because of group consensus.

But should they be increasing their confidence? Maybe that’s a bad idea.

Actually, I think it’s rational to be more sure of what you believe because that’s also what the group thinks. Part of the reason comes out of research into peer disagreement. Suppose you disagree with someone you think is your intellectual equal when it comes to being informed, reasoning ability, fair judgment, etc. The disagreement doesn’t have to be about any kind of hot-button issue; maybe you disagree about how to fairly split the group bill at a restaurant. Like every niche dispute in philosophy, there’s a massive discussion on this topic.

One popular solution is this: you should adopt the outside, third-person perspective on the disagreement. In the restaurant bill example, a third party would give equal weight to your judgment about how to split the bill and your interlocutor’s judgment. From the external perspective, both judgments are equally reasonable and they should each be given equal weight. The inside, first-person perspective is not importantly different from the outside, third-person perspective, so you too should give equal weight to the view of your opponent. Bayesians will say you should take this new information and update your judgment about the posterior probability of the correct way to split the bill. When two individuals disagree, both parties should be far less confident in their own judgments, adjusting their own view to more closely align with the other until they reach convergence.

What’s all this have to do with echo chambers? Suppose you’re 70% sure that raising local school taxes is a good idea. Then you find out that your peer group is 90% sure that raising the taxes is a good idea. This is a kind of peer disagreement, namely over how confident you should be. If you adopt the equal weight/outside view of the preceding paragraph, you should update your assessment and be even more confident about raising taxes. Knowing what your peers think should rationally make you more inclined to agree with them; after all, you’re not any smarter than they are. [1]
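To put a number on the school tax case: one common way to read the equal weight view is to split the difference, that is, to take the plain average of your credence and the group’s. That reading is an assumption made for this sketch (the essay does not commit to a formula), and the function name is hypothetical.

```python
def equal_weight_update(own_credence: float, peer_credence: float) -> float:
    """Split the difference: give your own view and the peer view equal weight."""
    return (own_credence + peer_credence) / 2


# You are 70% sure; your peer group is 90% sure.
updated = equal_weight_update(0.70, 0.90)
print(f"updated credence: {updated:.2f}")
```

On this reading you land halfway between where you started and where the group is, which is higher than where you began.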

Recursion

The final step in building an echo chamber is also the easiest, given the accounts of amplification and reflection above. That is recursion. After everyone has updated their confidence via reflection, and is more convinced than ever that some claim is true, feed that new, higher individual confidence back into Condorcet’s amplification mechanism. Now the collective group confidence is even higher than it was before, as it again amplifies individual credences. Once again take the new group confidence and reflect it back to individual group members. Again individual confidence gets closer to the group.

For example, suppose your epistemic group is composed of 100 people, each of whom is 55.2% convinced that claim C is true. Now amplify. By Condorcet’s Jury Theorem, collectively your epistemic group is 80% sure that C. After reflection, you revise upwards to more closely align with the group. Suppose you are now 56.5% sure that C. Everyone else in the group does the same. After another round of amplification the group has 90% confidence that C. [2] Once you see that the group confidence is so high, you use reflection to revise your personal credence upwards again. In the limit, group and individual confidence converge to 100%. This back-and-forth ratcheting upwards is an echo chamber.
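The ratchet can be run as a toy simulation. This is a sketch under assumptions that are not in the essay: every member holds the same credence, amplification is the chance of a strict majority under the jury theorem, and reflection moves each member a fixed fraction (`pull`, set here to an arbitrary 5%) of the way toward the group. All function and parameter names are invented for illustration, and the output need not match the rounded figures in the example exactly.

```python
from math import comb


def group_confidence(individual: float, members: int) -> float:
    """Amplify: chance that a strict majority of members sides with the claim."""
    needed = members // 2 + 1
    return sum(
        comb(members, k) * individual**k * (1 - individual) ** (members - k)
        for k in range(needed, members + 1)
    )


def reflect(individual: float, group: float, pull: float) -> float:
    """Reflect: move individual credence a fraction `pull` toward the group."""
    return individual + pull * (group - individual)


def echo_chamber(start: float, members: int, pull: float, rounds: int) -> list[tuple[float, float]]:
    """Recurse: alternate amplification and reflection, recording each round."""
    history = []
    individual = start
    for _ in range(rounds):
        group = group_confidence(individual, members)
        history.append((individual, group))
        individual = reflect(individual, group, pull)
    return history


history = echo_chamber(start=0.552, members=100, pull=0.05, rounds=30)
for round_number, (individual, group) in enumerate(history, start=1):
    print(f"round {round_number:>2}: individual {individual:.3f}, group {group:.3f}")
```

Group confidence races ahead within a few rounds, and individual confidence then climbs steadily after it. A larger `pull` speeds up the climb, which is one way to picture faster feedback from the group.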

When echo chambers are good

One benefit of my model is that it correctly predicts that polarization will get worse as intra-group communication improves. When any like-minded collection of people forms an epistemic community and shares its beliefs among its members, amplification will ensure that the group collectively holds such beliefs more strongly than the average member. Those beliefs could be true, false, or even nonsense, but once the echoing mechanism gets started, everyone in it will become increasingly convinced.

When it comes to topics that are political, religious, or otherwise unsettled and controversial, we should expect a diversity of epistemic groups: those who believe that transsexual women are really women and those who believe they are deluded men, those who maintain racism is a serious problem and those who aver that it is overblown, those who advocate a massive redistribution of wealth and those who think taxation is theft. All will eventually be completely certain of their views within an echo chamber.

In the past, it was more difficult for those with fringe beliefs—the earth is flat, the Illuminati secretly run the government, the Sandy Hook mass shooting was a hoax—to effectively form an epistemic group because they were relatively few and far between. With the internet and social media, it is now easy to find your fellow conspiracy theorists and exchange ideas. In addition, it is far easier to silo your information feed when so much of it is conducted in virtual interactions.

On my view of echo chambers, they are an automatic outcome of amplification and reflection, and the acceleration to maximum credence gets faster when recursion speeds up. This is just what Brugnoli et al. find: social media allows for quicker feedback from one’s epistemic group as well as allowing faster group aggregations of beliefs. Because social media accelerates recursion in an echo chamber, and there is a diversity of echo chambers across a range of opinions, we drive swiftly towards a more pronounced polarization of beliefs.

Everybody thinks that whatever echo chamber they are in is a good one. But sometimes they are right! Suppose you start off 60% sure that anthropogenic climate change is a real thing. Then you find out that all your trusted peers think the same thing. Amplification means the group as a whole is even more confident that climate change is real. Then you find out about the higher group confidence and update your own judgment. Now you’re 70% sure. Rinse and repeat and boom, you’re at 100% psychological certainty before you know it. Why is this good? Because you’re 100% sure of something that is actually true. If you are certain of the truth, it is proper to disregard “evidence” to the contrary. There is no value in taking seriously sophistical arguments or spurious data.

Echo chambers are not a bug in group reasoning, but a feature. They are practically automatic once certain conditions are satisfied; they aren’t a failure of any kind, but rather a completely predictable, rational, logically expected outcome. That doesn’t mean they lead to good results; phantom traffic jams arise even when all drivers are doing the best they can and following the rules of the road. Echo chambers gone bad are a kind of logical traffic jam, where you can start out mildly persuaded of dubious claims and proceed step by reasonable step to total conviction in falsehoods.

In the end, echo chambers are the outcome of fundamentally rational forces in group reasoning: amplification, reflection, and recursion. The computer programming cliché of “garbage in, garbage out” applies here as well—when an echo chamber gets started with bad information or even abject nonsense, it will build in intensity until its members are walled off from the truth. It is tempting to see those trapped in such circumstances as stupid or wicked, but that is a mistake.

Echo chambers are not inherently pernicious, and we would all do well to be ensconced in ones that promote the truth. Certainly many echo chambers are bad ones, but we should not expect a general solution to the bad ones any more than we should expect a general solution to prisoner’s dilemmas or other problems of suboptimal equilibria. All we are going to get are strategies and piecemeal approaches. The problem is how to make sure we are in the right echo chambers, when everyone already thinks their own epistemic group is likely to be correct.

[1] Aumann’s Agreement Theorem gives additional, related support for modifying one’s credences in light of learning about peer judgment, but I thought a discussion here would be too long and technical. A well-informed popular discussion is here. Also, you might not endorse the equal weight view, but can still acknowledge it is a reasonable approach to peer disagreement. That’s all I need.

Great article, thanks for sharing. I found your comments on the 'Recursion' phase of your model especially interesting. Nuanced viewpoints or the state of being undecided are phenomena which can exist only in the realm of the individual. Groups, by nature, seem to evoke rapid consensus.

The platforms of the American Democratic & Republican parties aren't exactly rigid political philosophies like Marxism or Anarchism. It has always surprised me that they each seem to come to a consensus so rapidly even across wildly different domains. Almost without fail, the consensus each party reaches is diametrically opposed to that of the opposing party. Thinking in terms of your model, I wonder if those in American politics might also participate in a sort of negative cycle in which they harden themselves against the viewpoints of their opponents.

This reads brilliantly to me. Upon reflection, however, I have two queries, which may qualify your conclusions:

- The amplification property of the echo chamber is derived from Condorcet’s theorem. The ‘voters’ in Condorcet’s theorem are each supposed to reach their verdict independently, aren’t they? What about the case in which voters are prone to ‘contagion’, e.g. from a charismatic leader or simply a ‘miraculous’ event? These are often described as cases of ‘madness’ as opposed to ‘wisdom’ of the crowds.

- Relatedly, your illustration of the working of the echo chamber seems to presuppose that individuals choose to belong to the echo chamber that reflects their beliefs. What about the case in which individuals choose their beliefs in order to belong to an echo chamber?

I am not sure how these arguments should qualify your conclusions but my intuition is that they should lead to a more pessimistic view of the phenomenon.